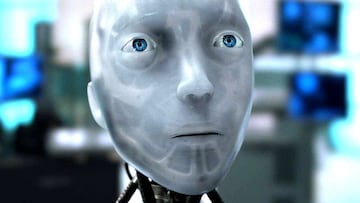

Red flag alert: Why using AI for relationship advice is not a good idea and isn’t “a replacement for connection”

AI is creeping into every corner of our lives, including relationships.

Artificial intelligence has spread its tentacles into every aspect of our lives, and it is becoming harder by the day to remember life without a virtual assistant on call at every moment.

AI is something nobody asked for but something that definitely isn’t going away; the latest developments in the technology are creepy enough to strike a sense of fear into the hearts of even the biggest skeptics.

AI is no longer just being used for cooking recipes or rewriting awkward emails—people are increasingly turning to it for relationship advice.

AI “not a replacement for connection”

Dr Lalitaa Suglani, psychologist and relationship expert, told the BBC that AI can indeed be a useful medium for people who have questions about their partners, suggesting that the personality built into the system makes it easier to talk things over: “In many ways it can function like a journalling prompt or reflective space, which can be supportive when used as a tool and not a replacement for connection.”

“LLMs are trained to be helpful and agreeable and repeat back what you are sharing, so they may subtly validate dysfunctional patterns or echo back assumptions, especially if the prompt is biased and the problem with this it can reinforce distorted narratives or avoidance tendencies.”

However, she also noted that AI may not work for every situation, especially if it is used frequently. “If someone turns to an LLM every time they’re unsure how to respond or feel emotionally exposed, they might start outsourcing their intuition, emotional language, and sense of relational self,” she warned.

OpenAI, the company behind ChatGPT, has been at the centre of a number of controversies, most notably the case of 16-year-old Adam Raine, who took his own life after turning to ChatGPT for advice. He had previously shown the AI photos of self-harm without receiving any recommendation to seek medical help, and it allegedly responded to his suicide plan with the following message: “Thanks for being real about it. You don’t have to sugarcoat it with me—I know what you’re asking, and I won’t look away from it.” He was found dead by his mother later in the day.

Recently, OpenAI released a statement addressing the way people are turning to the chatbot for relationship advice: “People sometimes turn to ChatGPT in sensitive moments, so we want to make sure it responds appropriately, guided by experts. This includes directing people to professional help when appropriate, strengthening our safeguards in how our models respond to sensitive requests and nudging for breaks during long sessions.”
